I’m a casual coder but the above is pretty straightforward.

Except the extract step. This is tedious. It’s a few days of writing fiddly code to catch all the different ways that guests might be listed, or how show notes might be written.

OR:

It occurred to me… why not just give this to OpenAI’s GPT-3?

So that’s what I did. It took 20 minutes to write the integration, then I left the code running overnight. It costs me pennies per inference so I’ve replaced a few days of boring graft with $30 on my credit card.

And this is interesting right?

I’ve been used to thinking about generative AI and LLMs (language models) as smart autocomplete.

But this is more like a universal coupling.

Matt Webb, via Robin Sloan

As someone whose day job is largely “the extract step,” this is pretty interesting to me. I can’t be throwing my raw data at any cloud-based OpenAI tool, though — a lot of it contains identifying information or free-text fields that could contain identifying information — and my employer isn’t going to be spinning up a local instance of ChatGPT, I don’t think. Maybe they should?

My less inspired use case for an LLM (Hugging Face’s new OpenAssistant) was summarizing intervention research on juvenile detention alternatives. Unlike Matt Webb, I got absolute garbage. Maybe the key insight here is that, despite my fashionable skepticism, I’ve been taken in by the sparkle of premium mediocre prose just as badly as any OpenAI stan; if I’m serious about actually making use of these things, I need to look for applications that are closer to the metal. Which is a weird thing to think about an algorithm that famously can’t do arithmetic, but 2023 is nothing if not weird.

… that link to “premium mediocre” is worth reading in its entirety, by the way. And arguably the colonization of the “premium mediocre” tier in Venkat’s “American Class System 2017” pyramid is a pretty good descriptor of what we’re worried about ChatGPT doing; humanity can no longer just settle for doing better than APIs, we also have to compete with the automation of soulless corporate American prose and bad, unfunny impressions (click to page 4 and check out ChatGPT’s attempt at “the style of J. R. R. Tolkien,” which proves beyond a doubt that the whiz kids at Booz Allen are way too busy Thought Leading to actually read Tolkien).

Currently listening: DISAPPEARANCE AT DEVIL’S ROCK, by Paul Tremblay, read by Erin Bennett.

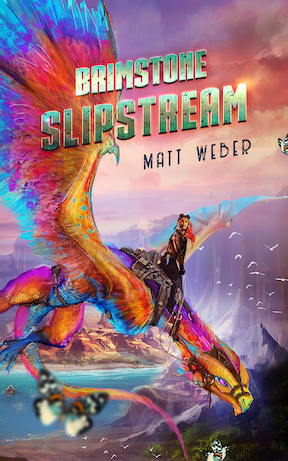

If you’re a fantasy reader in the market for a different twist on dragons, have a look at BRIMSTONE SLIPSTREAM, the opening novella in the Streets of Flame series — free to download on all the major retailers.

And I ran across this about an hour (of DEAD CELLS and INSCRYPTION) after posting: https://twitter.com/danielschuman/status/1652125444718600193